Implementation trials aim to test the effects of implementation strategies on the adoption, integration or uptake of an evidence-based intervention within organisations or settings. Feasibility and pilot studies can assist with building and testing effective implementation strategies by helping to address uncertainties around design and methods, assessing potential implementation strategy effects and identifying potential causal mechanisms. This paper aims to provide broad guidance for the conduct of feasibility and pilot studies for implementation trials.

We convened a group with a mutual interest in the use of feasibility and pilot trials in implementation science, including implementation and behavioural science experts and public health researchers. We conducted a literature review to identify existing recommendations for feasibility and pilot studies, as well as publications describing formative processes for implementation trials. In the absence of previous explicit guidance for the conduct of feasibility or pilot implementation trials specifically, we used the effectiveness-implementation hybrid trial design typology proposed by Curran and colleagues as a framework for conceptualising the application of feasibility and pilot testing of implementation interventions. We discuss and offer guidance regarding the aims, methods, design, measures, progression criteria and reporting for implementation feasibility and pilot studies.

This paper provides a resource for those undertaking preliminary work to enrich and inform larger scale implementation trials.

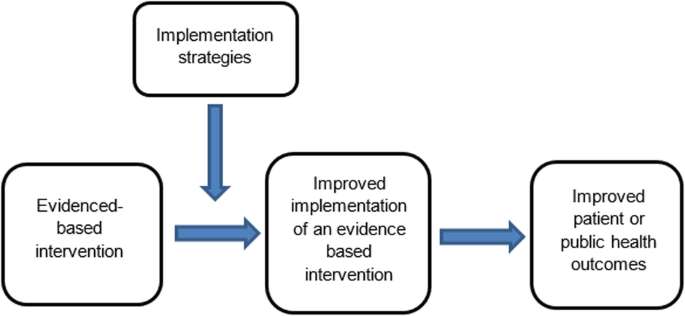

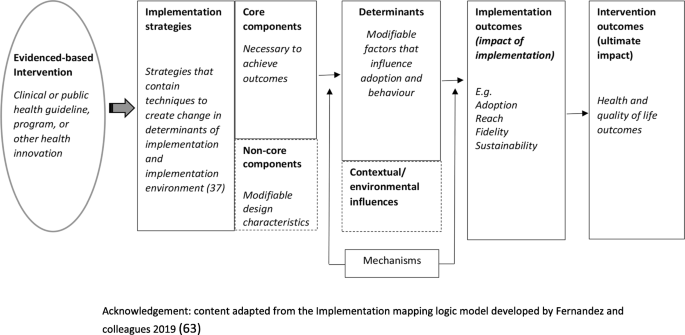

The failure to translate effective interventions for improving population and patient outcomes into policy and routine health service practice denies the community the benefits of investment in such research [1]. Improving the implementation of effective interventions has therefore been identified as a priority of health systems and research agencies internationally [2,3,4,5,6]. The increased emphasis on research translation has resulted in the rapid emergence of implementation science as a scientific discipline, with the goal of integrating effective medical and public health interventions into health care systems, policies and practice [1]. Implementation research aims to do this via the generation of new knowledge, including the evaluation of the effectiveness of implementation strategies [7]. The term “implementation strategies” is used to describe the methods or techniques (e.g. training, performance feedback, communities of practice) used to enhance the adoption, implementation and/or sustainability of evidence-based interventions (Fig. 1) [8, 9].

Definitions

Feasibility studies: an umbrella term used to describe any type of study relating to the preparation for a main study

Pilot studies: a subset of feasibility studies that specifically look at a design feature proposed for the main trial, whether in part or in full, conducted on a smaller scale [10]

While there has been a rapid increase in the number of implementation trials over the past decade, the quality of trials has been criticised, and the effects of the strategies tested in such trials on implementation, patient or public health outcomes have been modest [11,12,13]. To improve the likelihood of impact, factors that may impede intervention implementation should be considered during intervention development and across each phase of the research translation process [2]. Feasibility and pilot studies play an important role in improving the conduct and quality of a definitive randomised controlled trial (RCT) for both intervention and implementation trials [10]. For clinical or public health interventions, pilot and feasibility studies may serve to identify potential refinements to the intervention, address uncertainties around the feasibility of intervention trial methods, or test preliminary effects of the intervention [10]. In implementation research, feasibility and pilot studies perform the same functions as those for intervention trials, but with a focus on developing or refining implementation strategies, refining research methods for an implementation intervention trial, or undertaking preliminary testing of implementation strategies [14, 15]. Despite this, reviews of implementation studies appear to suggest that few full implementation randomised controlled trials have undertaken feasibility and pilot work in advance of a larger trial [16].

A range of publications provides guidance for the conduct of feasibility and pilot studies for conventional clinical or public health efficacy trials, including Guidance for Exploratory Studies of complex public health interventions [17] and the Consolidated Standards of Reporting Trials (CONSORT 2010) for Pilot and Feasibility trials [18]. However, given the differences between implementation trials and conventional clinical or public health efficacy trials, the field of implementation science has identified the need for nuanced guidance [14,15,16, 19, 20]. Specifically, unlike traditional feasibility and pilot studies that may include the preliminary testing of interventions on individual clinical or public health outcomes, implementation feasibility and pilot studies that explore strategies to improve intervention implementation often require assessing changes across multiple levels, including individuals (e.g. service providers or clinicians) and organisational systems [21]. Due to the complexity of influencing behaviour change, the role of feasibility and pilot studies of implementation may also extend to identifying potential causal mechanisms of change and facilitating an iterative process of refining intervention strategies and optimising their impact [16, 17]. In addition, whereas conventional clinical or public health efficacy trials are typically conducted under controlled conditions and directed mostly by researchers, implementation trials are more pragmatic [15]. As is the case for well-conducted effectiveness trials, implementation trials often require partnerships with end-users and, at times, the prioritisation of end-user needs over methods (e.g. random assignment) that seek to maximise internal validity [15, 22]. These factors pose additional challenges for implementation researchers and underscore the need for guidance on conducting feasibility and pilot implementation studies.

Given the importance of feasibility and pilot studies in improving implementation strategies and the quality of full-scale trials of those implementation strategies, our aim is to provide practice guidance for those undertaking formative feasibility or pilot studies in the field of implementation science. Specifically, we seek to provide guidance pertaining to the three possible purposes of undertaking pilot and feasibility studies, namely (i) to inform implementation strategy development, (ii) to assess potential implementation strategy effects and (iii) to assess the feasibility of study methods.

A series of three facilitated group discussions was conducted with a group of six members from Canada, the U.S. and Australia (the authors of this manuscript) who were mutually interested in the use of feasibility and pilot trials in implementation science. Members included international experts in implementation and behavioural science, public health and trial methods, and had considerable experience in conducting feasibility, pilot and/or implementation trials. The group was responsible for developing the guidance document, including identification and synthesis of pertinent literature, and approving the final guidance.

To inform guidance development, a literature review was undertaken in electronic bibliographic databases and Google to identify and compile existing recommendations and guidelines for feasibility and pilot studies broadly. Through this process, we identified 30 such guidelines and recommendations relevant to our aim [2, 10, 14, 15, 17, 18, 23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45]. In addition, seminal methods and implementation science texts recommended by the group were examined. These included the CONSORT 2010 Statement: extension to randomised pilot and feasibility trials [18], the Medical Research Council’s framework for development and evaluation of randomised controlled trials for complex interventions to improve health [2], the National Institute of Health Research (NIHR) definitions [39] and the Quality Enhancement Research Initiative (QUERI) Implementation Guide [4]. A summary of feasibility and pilot study guidelines and recommendations, and of seminal methods and implementation science texts, was compiled by two authors. This document served as the primary discussion document in meetings of the group. Additional targeted searches of the literature were undertaken in circumstances where the identified literature did not provide sufficient guidance. The manuscript was developed iteratively over 9 months via electronic circulation and comment by the group. Any differences in views between reviewers were discussed and resolved via consensus during scheduled international video-conference calls. All members of the group supported and approved the content of the final document.

The broad guidance provided is intended to be used as a supplementary resource to existing seminal feasibility and pilot study resources. We used the definitions of feasibility and pilot studies as proposed by Eldridge and colleagues [10]. These definitions propose that any type of study relating to the preparation for a main study may be classified as a “feasibility study”, and that the term “pilot” study represents a subset of feasibility studies that specifically look at a design feature proposed for the main trial, whether in part or in full, that is being conducted on a smaller scale [10]. In addition, when referring to pilot studies, unless explicitly stated otherwise, we will primarily focus on pilot trials using a randomised design. We focus on randomised trials as such designs are the most common trial design in implementation research, and randomised designs may provide the most robust estimates of the potential effect of implementation strategies [46]. Those undertaking pilot studies that employ non-randomised designs need to interpret the guidance provided in this context. We acknowledge, however, that using randomised designs can prove particularly challenging in the field of implementation science, where research is often undertaken in real-world contexts with pragmatic constraints.

We used the effectiveness-implementation hybrid trial design typology proposed by Curran and colleagues as the framework for conceptualising the application of feasibility testing of implementation interventions [47]. The typology makes an explicit distinction between the purpose and methods of implementation and conventional clinical (or public health efficacy) trials. Specifically, the first two of the three hybrid designs may be relevant for implementation feasibility or pilot studies. Hybrid Type 1 trials are those designed to test the effectiveness of an intervention on clinical or public health outcomes (primary aim) while conducting a feasibility or pilot study for future implementation via observing and gathering information regarding implementation in a real-world setting/situation (secondary aim) [47]. Hybrid Type 2 trials involve the simultaneous testing of both the clinical intervention and the testing or feasibility of a formed implementation intervention/strategy as co-primary aims. For this design, “testing” is inclusive of pilot studies with an outcome measure and related hypothesis [47]. Hybrid Type 3 trials are definitive implementation trials designed to test the effectiveness of an implementation strategy whilst also collecting secondary outcome data on clinical or public health outcomes on a population of interest [47]. As the implementation aim of a Hybrid Type 3 trial is addressed by a definitively powered trial, this design was not considered relevant to the conduct of feasibility and pilot studies in the field and will not be discussed.

Embedding of feasibility and pilot studies within Type 1 and Type 2 effectiveness-implementation hybrid trials has been recommended as an efficient way to increase the availability of information and evidence to accelerate the field of implementation science and the development and testing of implementation strategies [4]. However, implementation feasibility and pilot studies are also undertaken as stand-alone exploratory studies that do not include effectiveness measures in terms of patient or public health outcomes. As such, in addition to discussing feasibility and pilot trials embedded in hybrid trial designs, we will also refer to stand-alone implementation feasibility and pilot studies.

An overview of guidance (aims, design, measures, sample size and power, progression criteria and reporting) for feasibility and pilot implementation studies can be found in Table 1.

Measures of implementation feasibility and pilot study methods are similar to those of conventional studies for clinical or public health interventions. For example, standard measures of study participation and thresholds for study attrition rates (e.g. >20%) [73] can be employed in implementation studies [67]. Previous studies have also surveyed study data collectors to assess the success of blinding strategies [74]. Researchers may also consider assessing participation or adherence to implementation data collection procedures, the comprehension of survey items, data management strategies or other measures of feasibility of study methods [15].
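As a minimal sketch, an attrition check against a pre-specified threshold can be computed as follows. The 20% threshold is the example value given above; the function names and the pilot counts (40 sites enrolled, 31 providing follow-up data) are hypothetical and used only for illustration.

```python
def attrition_rate(n_enrolled: int, n_completed: int) -> float:
    """Proportion of enrolled units (participants or sites) lost to follow-up."""
    if n_enrolled <= 0:
        raise ValueError("n_enrolled must be positive")
    if not 0 <= n_completed <= n_enrolled:
        raise ValueError("n_completed must lie between 0 and n_enrolled")
    return (n_enrolled - n_completed) / n_enrolled


def exceeds_attrition_threshold(n_enrolled: int, n_completed: int,
                                threshold: float = 0.20) -> bool:
    """True if attrition is strictly greater than the threshold (e.g. >20%)."""
    return attrition_rate(n_enrolled, n_completed) > threshold


# Hypothetical pilot: 40 sites enrolled, 31 provided follow-up data
rate = attrition_rate(40, 31)
print(f"Attrition: {rate:.1%}")
print("Exceeds threshold:", exceeds_attrition_threshold(40, 31))
```

The same pattern applies at each level of an implementation study (e.g. organisations, service providers), so attrition can be reported separately for each level.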

In effectiveness trials, power calculations and sample size decisions are primarily based on the detection of a clinically meaningful difference in measures of the effects of the intervention on patient or public health outcomes such as behaviour, disease, symptomatology or functional outcomes [24]. In this context, the available study sample for implementation measures included in Hybrid Type 1 or 2 feasibility and pilot studies may be constrained by the sample and power calculations of the broader effectiveness trial in which they are embedded [47]. Nonetheless, a justification for the anticipated sample size for all implementation feasibility or pilot studies (hybrid or stand-alone) is recommended [18], to ensure that estimates of implementation measures and outcomes are sufficiently precise to be useful. For Hybrid Type 2 and relevant stand-alone implementation pilot studies, sample size calculations for implementation outcomes should seek to achieve estimates with a precision deemed sufficient to inform progression to a fully powered trial [18].
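One common way to justify a pilot sample size on precision grounds, rather than power, is to choose the number of units needed for a normal-approximation (Wald) 95% confidence interval around a proportion, such as the proportion of providers adopting a practice, to have a target half-width. The sketch below does this and then inflates the result by the standard design effect, 1 + (m − 1) × ICC, for providers clustered within organisations. This is one illustrative approach under stated assumptions, not a method prescribed by the guidance above; the function names, the conservative anticipated proportion of 0.5, the ±10 percentage-point half-width, the cluster size of 10 and the ICC of 0.05 are all hypothetical.

```python
import math
from statistics import NormalDist


def n_for_proportion(p: float, half_width: float, confidence: float = 0.95) -> int:
    """Units needed so a Wald CI for a proportion p has the target half-width."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    return math.ceil(z ** 2 * p * (1 - p) / half_width ** 2)


def inflate_for_clustering(n: int, cluster_size: int, icc: float) -> int:
    """Apply the design effect 1 + (m - 1) * ICC, assuming equal-sized clusters."""
    design_effect = 1 + (cluster_size - 1) * icc
    return math.ceil(n * design_effect)


# Hypothetical: anticipated adoption 50%, desired precision +/- 10 points
n_simple = n_for_proportion(p=0.5, half_width=0.10)
n_clustered = inflate_for_clustering(n_simple, cluster_size=10, icc=0.05)
print("Individually sampled providers:", n_simple)
print("Providers allowing for clustering:", n_clustered)
print("Organisations of 10 providers:", math.ceil(n_clustered / 10))
```

Using p = 0.5 maximises p(1 − p) and so gives the largest (most conservative) sample for a given half-width; where the available sample is fixed by an embedding effectiveness trial, the same formula can instead be rearranged to report the precision that sample will achieve.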

Stating progression criteria when reporting feasibility and pilot studies is recommended as part of the CONSORT 2010 extension to randomised pilot and feasibility trials guidelines [18]. Generally, it is recommended that progression criteria should be set a priori and be specific to the feasibility measures, components and/or outcomes assessed in the study [18]. While little guidance is available, ideas around suitable progression criteria include assessment of uncertainties around feasibility, meeting recruitment targets, cost-effectiveness and refining causal hypotheses to be tested in future trials [17]. When developing progression criteria, the use of guidelines rather than strict thresholds is suggested [18], in order to allow for appropriate interpretation and exploration of potential solutions, for example, the use of a traffic light system with varying levels of acceptability [17, 24]. Similarly, Thabane and colleagues recommend that, in general, the outcome of a pilot study can be one of the following: (i) stop—main study not feasible (red); (ii) continue, but modify protocol—feasible with modifications (yellow); (iii) continue without modifications, but monitor closely—feasible with close monitoring and (iv) continue without modifications (green) [44, p. 5].
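A traffic light system of this kind can be made explicit by recording, a priori, a "green" cut-off and a "red" cut-off for each feasibility measure. The sketch below is a minimal illustration under hypothetical assumptions: the measure names, observed values and cut-offs are invented, higher values are assumed to be better, only the three coloured outcomes are modelled, and the rule that the most serious signal flags the study for discussion is an illustrative choice rather than part of the guidance. In keeping with the preference for guidelines over strict thresholds, the output is meant to prompt interpretation with the team and stakeholders, not to make the progression decision automatically.

```python
from enum import IntEnum


class Signal(IntEnum):
    """Ordered so that a higher value indicates a more serious concern."""
    GREEN = 0   # continue without modifications
    YELLOW = 1  # continue, but modify protocol
    RED = 2     # stop: main study not feasible as designed


def classify(observed: float, green_at: float, red_below: float) -> Signal:
    """Classify a measure where higher values are better (e.g. % of target met)."""
    if red_below > green_at:
        raise ValueError("red_below must not exceed green_at")
    if observed >= green_at:
        return Signal.GREEN
    if observed < red_below:
        return Signal.RED
    return Signal.YELLOW


# Hypothetical a priori criteria: name -> (observed, green_at, red_below)
criteria = {
    "recruitment (% of target)": (0.85, 0.80, 0.60),
    "fidelity (% of components delivered)": (0.65, 0.75, 0.50),
    "retention (% of sites)": (0.90, 0.80, 0.70),
}

signals = {name: classify(*values) for name, values in criteria.items()}
overall = max(signals.values())  # most serious signal flags the study for discussion

for name, sig in signals.items():
    print(f"{name}: {sig.name}")
print("Overall:", overall.name)
```

Keeping the cut-offs in a single table-like structure also makes it straightforward to report the pre-specified criteria alongside the observed values, as the CONSORT extension recommends.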

As the goal of the implementation component of a Hybrid Type 1 trial is usually formative, it may not be necessary to set additional progression criteria in terms of the implementation outcomes and measures examined. As Hybrid Type 2 trials test an intervention and can pilot an implementation strategy, these and non-hybrid pilot studies may set progression criteria based on evidence of potential effects, but may also consider the feasibility of trial methods, service provider, organisational or patient (or community) acceptability, fit with organisational systems and cost-effectiveness [17]. In many instances, the progression of implementation pilot studies will require the input and agreement of stakeholders [27]. As such, the establishment of progression criteria and the interpretation of pilot and feasibility study findings in the context of such criteria require stakeholder input [27].

As formal reporting guidelines do not exist for hybrid trial designs, we would recommend that feasibility and pilot studies conducted as part of hybrid designs draw upon best practice recommendations from relevant reporting standards such as the CONSORT extension for randomised pilot and feasibility trials, the Standards for Reporting Implementation Studies (STaRI) guidelines and the Template for Intervention Description and Replication (TIDieR) guide, as well as any other design-relevant reporting standards [48, 50, 75]. These, and further reporting guidelines specific to the particular research design chosen, can be accessed via the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) network, a repository for reporting guidance [76]. In addition, researchers should specify the type of implementation feasibility or pilot study being undertaken using accepted definitions. If applicable, the choice of hybrid trial design and its justification should also be stated. In line with existing recommendations for reporting of implementation trials generally, reporting on the referent of outcomes (e.g. specifying whether the measure relates to the specific intervention or to the implementation strategy) [62] is also particularly pertinent when reporting hybrid trial designs.

Concerns are often raised regarding the quality of implementation trials and their capacity to contribute to the collective evidence base [3]. Although there have been many recent developments in the standardisation of guidance for implementation trials, information on the conduct of feasibility and pilot studies for implementation interventions remains limited, potentially contributing to a lack of exploratory work in this area and a limited evidence base to inform effective implementation intervention design and conduct [15]. To address this, we synthesised the existing literature and provided commentary and guidance for the conduct of implementation feasibility and pilot studies. To our knowledge, this work is the first to do so and is an important first step toward the development of standardised guidelines for implementation-related feasibility and pilot studies.